11 1B: Lab 4

11.1 Pre-class activities

For your final Level 1 pre-class activity (hurrah!), we’re going to introduce a few new functions to show you some other things that R can do and that will also reinforce your understanding of probability.

11.1.1 Simulation

One of the most powerful features of R is that you can use it for data simulation. Data simulation is the act of generating random numbers that follow a certain distribution or have known properties. This might not sound particularly impressive, but simulating data means that you can do things such as plan your statistical analyses, understand and demonstrate how probability works, or estimate how many participants you need to test in your experiment based upon what you think the data will look like. Data simulation uses the different types of distributions that we covered in Lab 3 to generate data, so make sure that you’re happy with the probability chapter before you move on.

11.1.2 Activity 1: sample()

Just like Lab 3, all the functions we need for simulation are contained in Base R, however, we’ll also load the tidyverse so that we can wrangle our simulated data.

Let’s start by introducing the sample() function, which samples elements (data points) from a vector (a collection of things that are of the same type, like numbers or words). We can use sample() to simulate flipping a coin and build some of the graphs you saw in the probability chapter. sample() is used when we want to simulate discrete data, i.e., nominal or ordinal data.

sample() requires you to define three arguments:

x= a vector of elements, i.e., all of the possible outcomes. For our current example, this would be HEADS and TAILS.

size= how many samples do you want to take, i.e., how many times do you want R to flip the coin?

replace= specifies whether we should sample with replacement or not. In the last lab we used the example of pulling names out of a hat. If you put the name back in the hat each time you pulled one out this would be with replacement, if you don’t put the name back in this would be sampling without replacement. Basically, do you want to be able to get the same outcome on different samples? For a coin flip, it should be possible to get the same outcome more than once, so we specifyTRUE. If we specifiedFALSEyou could only draw as many samples as there were unique values, so in our case we could only flip the coin twice: once it would land on heads, once on tails, and then we would run out of outcomes.Open a new R Markdown document, name it “Pre-class 4” and save it in your Psych 1B folder.

Copy, paste, and run the below code in a new code chunk to simulate flipping a coin 4 times (and load the

tidyverse).

# Notice that because our event labels are strings (text),

# we need to enter them into the function in "quotes"

library(tidyverse)

sample(x = c("HEADS", "TAILS"), size = 4, replace = TRUE) ## [1] "HEADS" "HEADS" "TAILS" "TAILS"How many heads did you get? Don’t worry if it’s different to our example. Run the code again. How many heads did you get this time? How many do you get on each turn if you run the code five more times?

When you perform data simulation, you’re unlikely to get the same outcome each time you take a sample, just like if you flipped a coin 4 times on 5 separate occasions you would be unlikely to get the same number of heads each time. What’s so useful about data simulation is that we can use the outcomes from lots of different sampling attempts to calculate the probability of a particular outcome, e.g., getting 4 heads from 4 flips of a coin.

So that we can add up the number of heads and tails more easily, let’s simulate those coin flips again, but using numerical codes, 1 = HEADS, 0 = TAILS.

- Now that the outcomes are numeric, we don’t need the combine function

c

- 0:1 means all numbers from 0 to 1 in steps of 1. So basically, 0 and 1. If you wanted to simulate rolling a die, you would write

1:6which would give you all the whole numbers from 1 to 6.

## [1] 0 1 1 011.1.3 Activity 2: sum()

Now that we’re using ones and zeroes we can count the number of heads by summing the values of the outcomes. The below code will sample our coin flips as above, and then count up the outcomes. Because we’ve coded heads = 1 and tails = 0, we can interpret the sum of all the outcomes as the number of heads.

- Copy, paste and run the code below in a new code chunk.

# This code pipes the output of sample() into sum() which counts up the number of heads/1s.

sample(x = 0:1, size = 4, replace = TRUE) %>% sum()## [1] 2Run this function multiple times (you can use the green markdown play arrow at the top right of the code chunk to make this easy). In our simulation of five sets of four flips we got 1, 3, 2, 2, and 3 heads. So in only one out of the five simulations did we get exactly one heads, i.e., a proportion of .2 or 20% of the time.

11.1.4 Activity 3: replicate() 1

Let’s repeat the experiment a whole bunch more times. We can have R do this over and over again using the replicate() function. replicate() requires two arguments (although there are other optional arguments if you want to do more complicated tasks):

n= the number of times you want to repeat your code

expr= the expression, or code, you want to repeat

Copy, paste and run the below code into a new code chunk to run the sample function and sum up the outcomes 20 times.

## [1] 1 3 4 1 1 1 2 3 1 2 1 2 2 3 4 1 1 2 3 211.1.5 Monte Carlo simulation

Every year, the city of Monte Carlo is the site of innumerable games of chance played in its casinos by people from all over the world. This notoriety is reflected in the use of the term “Monte Carlo simulation” among statisticians to refer to using a computer simulation to estimate statistical properties of a random process. In a Monte Carlo simulation, the random process is repeated over and over again in order to assess its performance over a very large number of trials. It is usually used in situations where mathematical solutions are unknown or hard to compute. Now we are ready to use Monte Carlo simulation to demonstrate the probability of various outcomes.

11.1.6 Activity 4: replicate() 2

We are going to run our coin flip experiment again but this time we are going to run the experiment 50 times (each including 4 coin tosses), and use the same principles to predict the number of heads we will get.

- Copy, paste, and run the below code to run the simulation and store the result in an object `heads50** using the code below:

## [1] 3 2 3 4 2 1 2 2 2 2 4 1 3 2 1 3 3 1 3 3 1 2 2 2 2 2 1 2 4 0 2 0 2 3 3 2 1 2

## [39] 2 0 3 2 2 2 4 1 3 3 4 311.1.7 Activity 5: probability

We can estimate the probability of each of the outcomes (0, 1, 2, 3, 4 heads after 4 coin tosses) by counting them up and dividing by the number of experiments. We will do this by putting the results of the replications in a tibble() and then using count().

data50 <- tibble(heads = heads50) %>% # convert to a table

group_by(heads) %>% # group by number of possibilities (0,1,2,3,4)

summarise(n = n(), # count occurances of each possibility,

p=n/50) # & calculate probability (p) of each| heads | n | p |

|---|---|---|

| 0 | 3 | 0.06 |

| 1 | 8 | 0.16 |

| 2 | 21 | 0.42 |

| 3 | 13 | 0.26 |

| 4 | 5 | 0.10 |

Your numbers may be slightly different to the ones presented in this book - remember that by default, each time you run a simulation, you will get a different random sample.

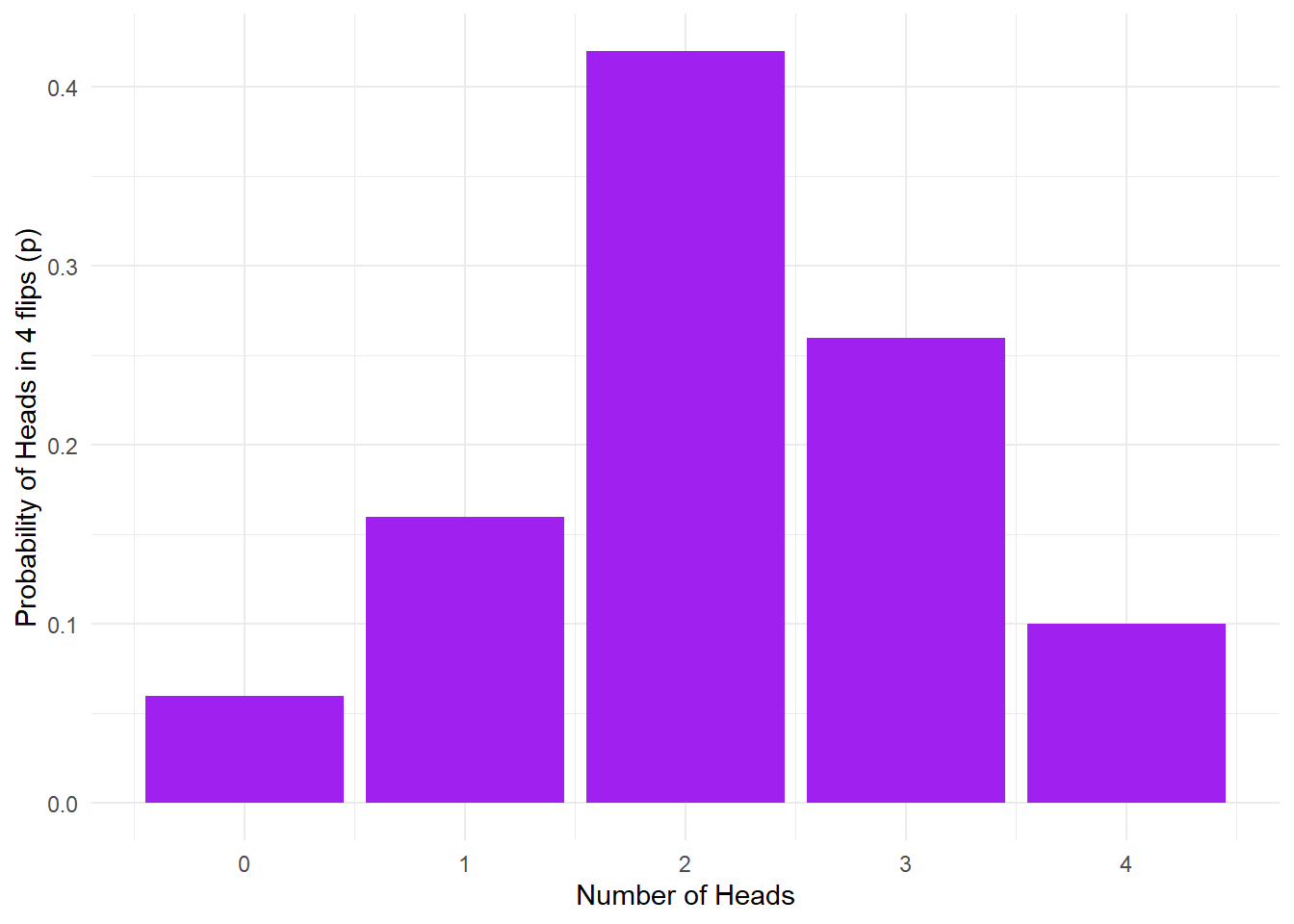

11.1.8 Activity 6: visualisation

We can then plot a histogram of the outcomes using geom_bar().

# Note: stat = "identity" tells ggplot to use the values of the y-axis variable (p) as the height of the bars in our histogram (as opposed to counting the number of occurances of those values)

ggplot(data50, aes(x = heads,y = p)) +

geom_bar(stat = "identity", fill = "purple") +

labs(x = "Number of Heads", y = "Probability of Heads in 4 flips (p)") +

theme_minimal()

Figure 11.1: No. of heads from 4 coin tosses probability outcomes.

Pause here and interpret the above figure

- What is the estimated probability for flipping 0/4 heads? 1/4 heads? 2/4 heads? 3/4 heads? 4/4 heads?

Unfortunately sometimes this calculation will estimate that the probability of an outcome is zero since this outcome never came up when the simulation is run. If you want reliable estimates, you need a bigger sample to minimise the probability that a possible outcome won’t occur.

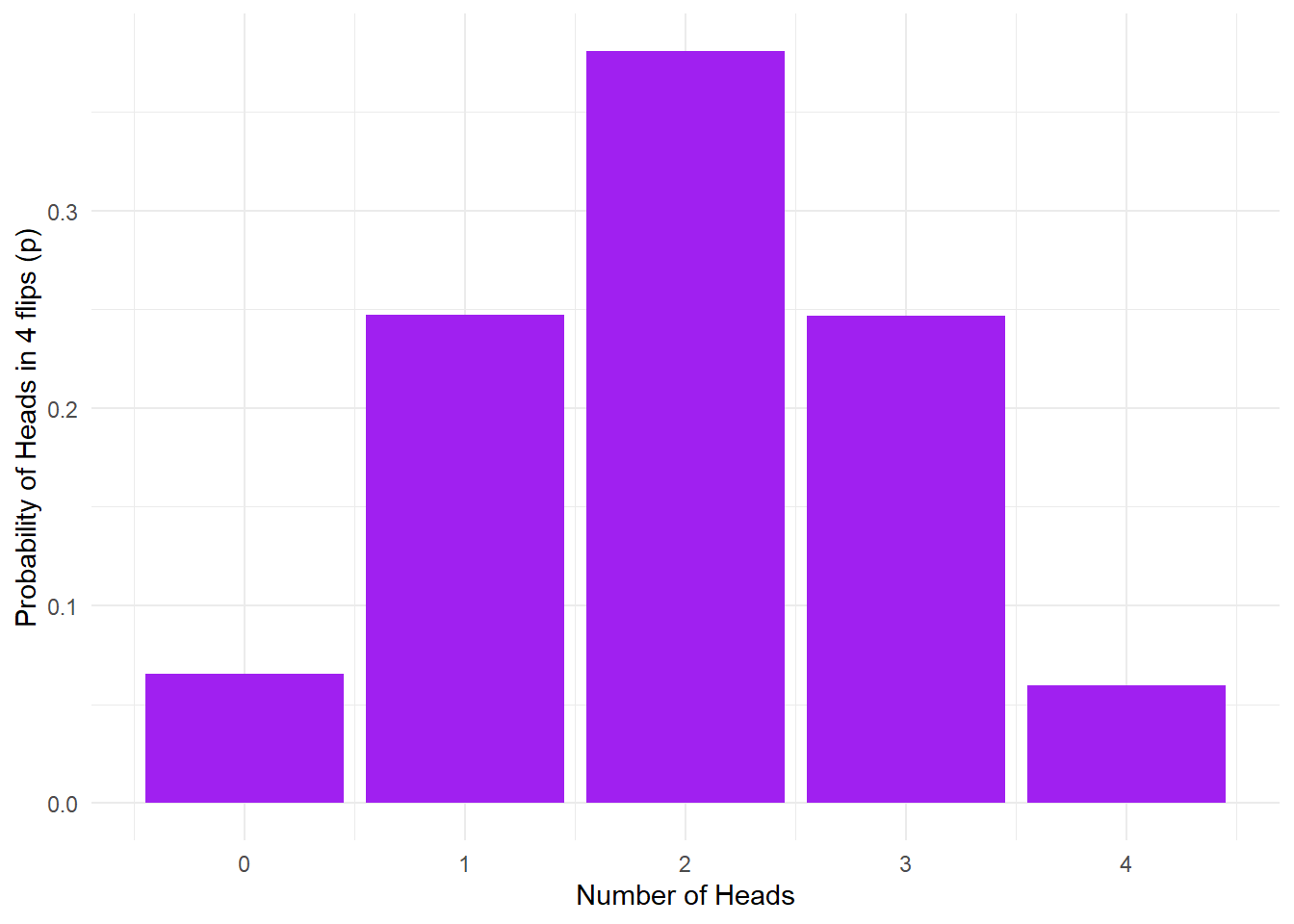

11.1.9 Activity 7: big data

Let’s repeat the Monte Carlo simulation, but with 10,000 trials instead of just 50. All we need to do is change n from 50 to 10000.

Again, we’ll put the outcome into a table using tibble and calculate counts and probabilities of each outcome using group_by() and summarise(). Remember to try reading your code in full sentences to help you understand what multiple lines of code connected by pipes are doing. How would you read the below code?

Finally, we can visualise this as we did earlier.

ggplot(data10K, aes(heads,p)) +

geom_bar(stat = "identity", fill = "purple") +

labs(x = "Number of Heads", y = "Probability of Heads in 4 flips (p)") +

theme_minimal()

Figure 11.2: 10K coin toss probability outcomes.

Using Monte Carlo simulation, we estimate that the probability of getting exactly one head on four throws is about 0.25. The above result represents a probability distribution for all the possible outcomes in our experiments. We can derive lots of useful information from this.

For instance, what is the probability of getting two or more heads in four throws? This is easy: the outcomes meeting the criterion are 2, 3, or 4 heads. We can just add these probabilities together like so:

## # A tibble: 1 x 1

## p2

## <dbl>

## 1 0.687You can add probabilities for various outcomes together as long as the outcomes are mutually exclusive, that is, when one outcome occurs, the others can’t occur. For this coin flipping example, this is obviously the case: you can’t simultaneously get exactly two and exactly three heads as the outcome of a single experiment. However, be aware that you can’t simply add probabilities together when the events in question are not mutually exclusive: for example, the probability of the coin landing heads up, and it landing with the image of the head being in a particular orientation are not mutually exclusive, and can’t be simply added together.

This is the basis of how we can calculate the probability of an outcome using a known distribution - by simulating a large number of trials we can use this as an estimate for how our data will look in the real world.

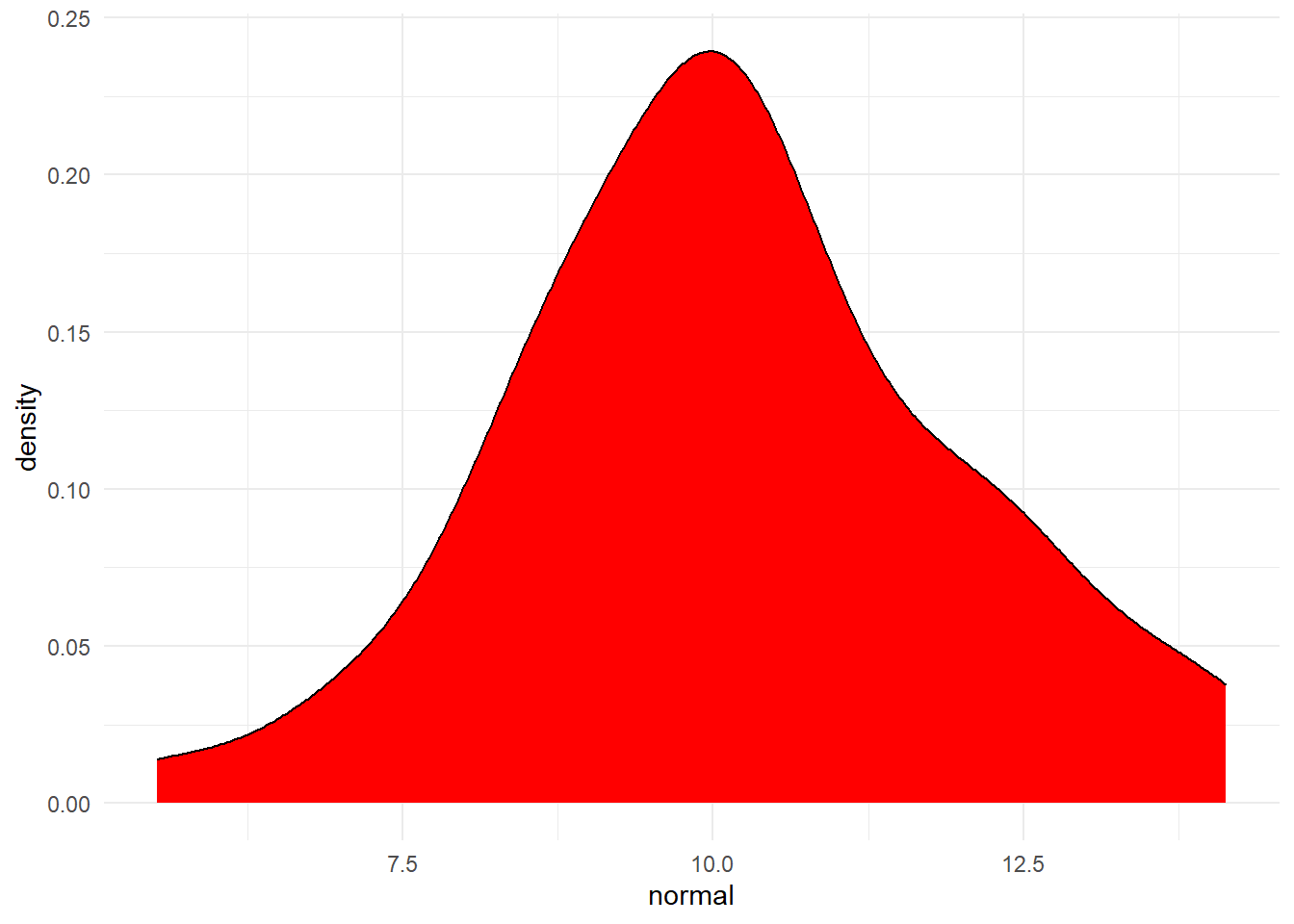

11.1.10 Activity 8: rnorm()

We can also use R to simulate continuous data that follow a normal distribution using rnorm(). You’ve actually used rnorm() before, all the way back in Lab 2 of Psych 1A but we’ll go over it again.

nis the number of data points you wish to simulate which is the only required argument forrnorm()meanis the mean that you want your data to have. If you don’t provide this argument,rnorm()will use a default value ofmean = 0.sdis the standard deviation you want your data to have. If you don’t provide this argument,rnorm()will use a default value ofsd = 1.

Copy, paste and run the below code in a new code chunk. This will randomly generate 50 numbers that collectively have a mean of 10 and a SD of 2 and then store it in the object normal.

You can check that the data you have generated are as you expected by calculating the mean and SD of this new variable - you shouldn’t expect the values to be exactly 10 and 2 (remember, it’s randomly generated), but they should be reasonably close.

## [1] 10.18396

## [1] 1.811044Finally, you can visualise your data with a density plot. Try changing the number of data points generated by rnorm() from 50 to 500 to 5000 and then see how the shape of the distribution changes.

tibble(normal = normal) %>% #turn the variable normal into a table and then

ggplot(aes(normal)) + # create a density plot

geom_density(fill = "red") +

theme_minimal()

Figure 4.4: Distribution of variable created by rnorm

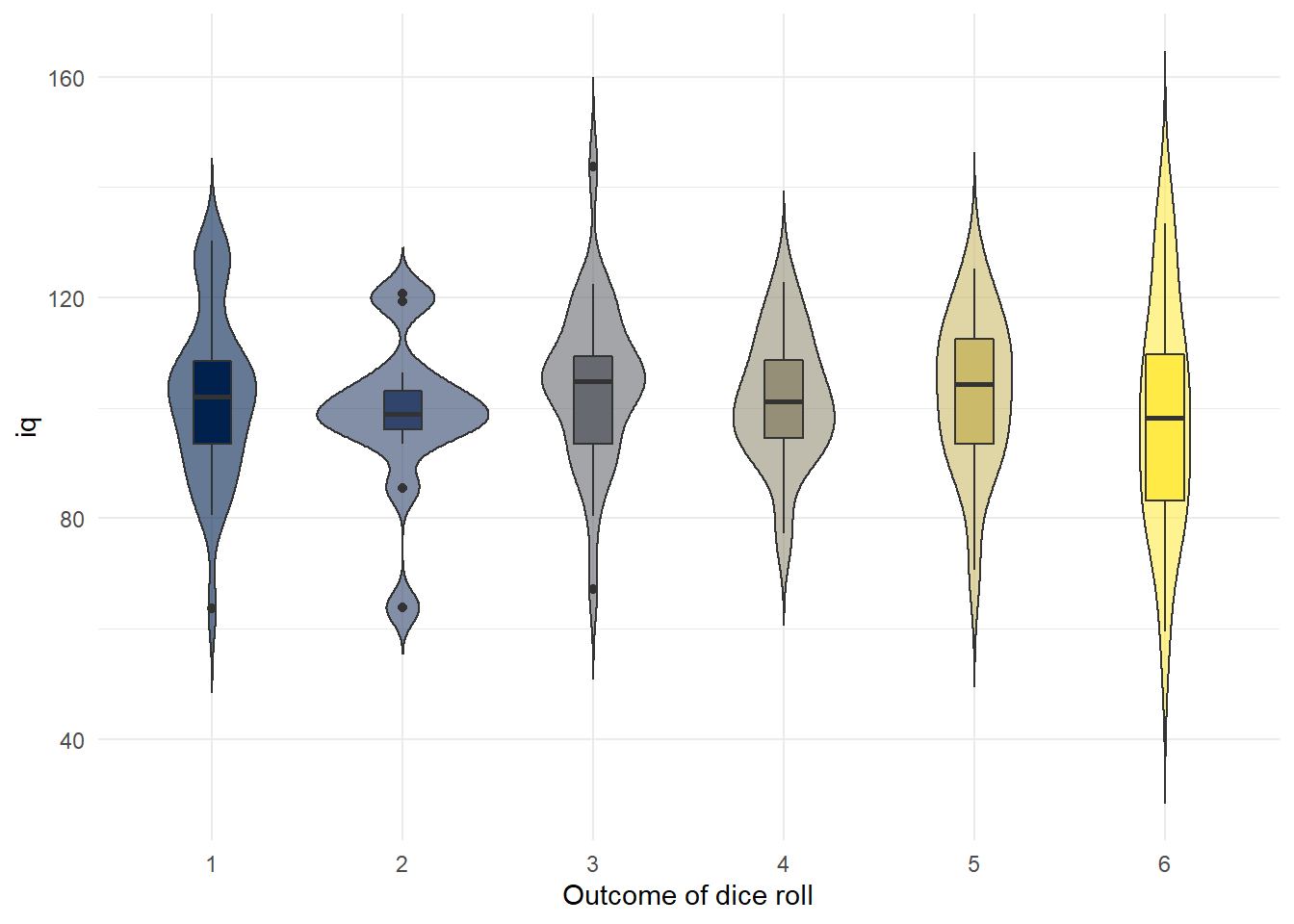

11.1.11 Activity 9: Simulate a dataset

Finally, we can put all of this together to simulate a full dataset. Let’s imagine that we’re going to run an experiment to see whether 120 people will roll a higher number on a die if their IQ is higher. This is obviously a stupid experiment but psychology does occasionally do stupid things.

- First, let’s create a variable that has all of our subject IDs. We’re just going to assign our participants numerical codes.

Then we’re going to create a column for gender using a new but simple function rep which stands for “repeat”. The below code will create a variable that repeats “man” 40 times, then “women” 40 times, then “non-binary” 40 times.

Next, let’s simulate them all rolling a die once using sample().

Then, let’s simulate their IQ scores. IQ scores are standardised so that overall, the population has an average IQ of 100 and a SD of 15 so we can use this information to simulate the data with rnorm().

Finally, we can stitch all these variables together into a table.

Now that we’ve got our simulated data we could write code to analyse it even before we’ve collected any real data which will not only save us time in the future, but will help us plan our analyses and we could include this code in a pre-registration document.

For example, you could create a plot of IQ scores for each dice roll (remember these are not real data…)

sim_data %>%

mutate(rolls = as.factor(rolls)) %>%

ggplot(aes(x = rolls, y = iq, fill = rolls)) +

geom_violin(trim = FALSE, alpha = .6, show.legend = FALSE) +

geom_boxplot(width = .2, show.legend = FALSE) +

scale_fill_viridis_d(option = "E") +

theme_minimal() +

labs(x = "Outcome of dice roll")

Figure 10.1: Boxplot of IQ scores grouped by what each person rolled on the die

11.1.12 Finished!

The in-class activities for Lab 4 are to analyse your group project data which means in terms of new stuff to learn, we’re done! In Psych 1A and 1B, we’ve tried to give you a solid introduction to common data skills you’ll need in order to conduct your own research. Even if you don’t intend to continue with psychology or quantitative research in the future, we hope that you’re proud of the skills you’ve learned. For some of you, R might not have been your favourite bit of the course, but you should be very proud of what you’ve achieved and with such a diverse class we hope you can see that programming isn’t an innate skill that only certain people can learn. It just take a a bit of work, some (hopefully) helpful teaching materials, and a lot of swearing at the error messages.

11.2 In-class activities

We’ve spent the last 6 months giving you the skills you need to be able to deal with your own data. Now’s the time to show us what you’ve learned. In this chapter we’re going to describe the steps you will need to go through when analysing your data but, aside from a few lines that will help you deal with the questionnaire data that the Experimentum platform spits out, we’re not going to give you any code solutions. Everything you need to do you’ve done before, so use this book to help you. Remember, you don’t need to write the code from memory, you just need to find the relevant examples and then copy and paste and change what needs changing to make it work for you.

We suggest that you problems-solve the code as a group, however, make sure that you all have a separate copy of the final working code.

11.2.1 Step 1: Load in packages and data

The data files are on Moodle. Remember, don’t share them in Messenger etc. because of data protection laws.

Don’t change ANY of the code from step 1 and 2. Just copy and paste it into R EXACTLY as it is below.

11.2.2 Step 2: Clean up the data

Run the below code - don’t change anything. This code will clean up the Experimentum data a little bit to help you on your way. You will get a message saying NAs introduced by coercion. Ignore this message, it’s a result of converting the employment hours to a numeric variable.

demo_final <- demo %>%

group_by(user_id, q_id) %>%

filter(session_id == min(session_id), endtime == min(endtime)) %>%

filter(row_number() == 1) %>%

ungroup() %>%

filter(user_status %in% c("guest", "registered")) %>%

select(user_id, user_sex, user_age, q_name, dv) %>%

pivot_wider(names_from = q_name, values_from = dv)%>%

mutate(employment = as.numeric(employment))

teams_final <- teams %>%

group_by(user_id, q_id) %>%

filter(session_id == min(session_id), endtime == min(endtime)) %>%

filter(row_number() == 1) %>%

ungroup() %>%

filter(user_status %in% c("guest", "registered")) %>%

select(user_id, user_sex, user_age, dv) %>%

rename("team" = "dv")

mslq_final <- mslq %>%

group_by(user_id, q_id) %>%

filter(session_id == min(session_id), endtime == min(endtime)) %>%

filter(row_number() == 1) %>%

ungroup() %>%

filter(user_status %in% c("guest", "registered")) %>%

select(user_id, user_sex, user_age, q_name, dv) %>%

arrange(q_name) %>%

pivot_wider(names_from = q_name, values_from = dv)Right. Your turn. Remember, coding isn’t about memorising code, it’s about knowing where to look for examples that you can modify with your new variables. You may find it helpful to use the search function in this book.

Figure 11.3: Searching for functions

11.2.3 Step 3: Join

Join together the data files by their common columns. The resulting dataset is going to have 91 columns which means that R won’t show you them all if you just click on the object, you’ll need to run summary(). Hint: You can only join two objects at once, so you’ll need to do multiple joins (in a pipeline if you’re feeling snazzy).

11.2.4 Step 4. Select your variables

Use select to retain only the variables you need for your chosen research design and analysis, i.e. the responses to the sub-scale you’re interested in as well as the user id, sex, age, team name, and any variables you’re going to use as criteria for inclusion. You might find it helpful to consult the MSLQ overview document from the Lab 1 pre-class to get the variable names.

11.2.5 Step 5: Factors

Use summary or str to check what type of variable each column is. Recode any necessary variables as factors and then run summary again to see how many you have in each group. You will find the code book you downloaded with the data files from Moodle helpful for this task. You may find the Psych 1A section on factors helpful for this.

11.2.6 Step 6: Filter

If necessary, use filter to retain only the observations you need, for example, you might need to delete participants above a certain age, or only use mature students etc. (and make sure you kept all these columns in step 4). Do not filter the data for your team yet. You will find the code book you downloaded with the data files from Moodle helpful for this task.

If your grouping variable is whether students undertake paid employment, you will need to create a new variable using mutate that categorises participants into employed (> 0 hours worked per week) and not employed (0 hours per week) categories.

An additional bit of syntax you might find useful for this is the %in% notation which allows you to filter by multiple values. For example, the following code will retain all rows where user_sex equals male OR female and nothing else (i.e., it would get rid of non-binary participants, prefer not to says, and missing values).

You can also do it by exclusion with !. The below code would retain everything where user_sex doesn’t equal male or female.

If you were feeling really fancy you could do steps 3 - 6 in a single pipeline of code.

11.2.7 Step 7: Sub-scale scores

Calculate the mean score for each participant for your chosen sub-scale. There are a few ways you can do this but helpfully the Experimentum documentation provides example code to make this easier, you just need to adapt it for the variables you need. You may also want to change the na.rm = TRUE for the calculation of means depending on whether you want to only include participants who completed all questions.

dat_means <- data %>% # change data to the name of the data object you want to work from

pivot_longer(names_to = "var", values_to = "val", question_1:question_5) %>% # change question_1:question_5 to the relevant variables for your sub-scale, don't change anything else

group_by_at(vars(-val, -var)) %>% # don't change this at all

summarise(scale_mean = mean(val, na.rm = TRUE)) %>% # change scale_mean to the name of your sub-scale, e.g., anxiety_mean

ungroup() # don't change this at all11.2.8 Step 8: Split the dataset

Next, use filter again to create a new dataset that only contains the data from participants who contributed to your team and call it dat_means_team. Once this is complete, you will have the final large dataset that contains the scale scores for all participants, and a smaller dataset that just has data from the participants you recruited. Use the codebook to find which number corresponds to your team.

11.2.9 Step 9: Demoraphic information

That should be the really hard bit done, now you’ve got the data in the right format for analysis.

First, calculate the demographic information you need: number of participants, gender split, grouping variable split (if you’re using a variable that’s not gender), mean age and SD.

You can calculate mean age and SD using summarise() like you’ve done before. There’s several different ways that you can count the number of participants in each group, we haven’t explicitly shown you how to do this yet so we’ll give you example code for this below. The code is fairly simple, you just need to plug in the variables you need.

Do this separately for the full dataset and your team dataset.

# count the total number of participants in the dataset

dat_means %>%

count()

# count the number of responses to each level of user_sex (for gender)

dat_means %>%

group_by(user_sex) %>%

count()

# count the number of responses to each level of mature student status (you may need to change this variable to the one you're using)

dat_means %>%

group_by(mature) %>%

count()

# count the number of responses across two categories (you might not need or want to do this)

dat_means %>%

group_by(user_sex,mature) %>%

count()Once you’ve done this you might realise that you have participants in the dataset that shouldn’t be there. For example, you might have people who have answered “Not applicable” to the mature student question, or you might have some NAs (missing data from when people didn’t respond).

You need to think about whether you need to get rid of any observationse from your dataset. For example, if you’re looking at gender differences, then you can’t have people who are missing gender information. You may have said in your pre-reg that you would only include non-binary people if they made up a certain proportion of the data. If you’re looking at mature student status, you can’t have people who didn’t answer the question or who said not applicable (i.e., postgrad students). You need to decide whether any of this is a problem, and potentially go back and add in an extra filter to step 6.

11.2.10 Step 10: Descriptive statistics

Use summarise and group_by to calculate the mean, median, and standard deviation of the sub-scale scores for each group. Do this separately for the full dataset and your team dataset.

11.2.11 Step 11: visualisation

You now need to create a bar chart with error bars, a violin-boxplot and a grouped density plot for both the full dataset and your team dataset. You’ve done all of these before, just find a previous example code and change the variables and axis labels.

It’s up to you how your present these in presentation. You might want to put all of the team graphs on one slide and then all of the overall dataset graphs on another. Or you might decide that it is better to put the same type of graphs on the same slide, to help comparisons. We want you to think about what will communicate the data best, so it’s up to you.

`