1 Intro to using AI

There is a range of AIs and an even larger number of niche apps and platforms, but we’re going to focus on Microsoft’s Copilot, accessed by signing in with your UofG account. We’ve chosen Copilot because all University of Glasgow students have access to the same plan and functionality. The UofG version of Copilot also has enhanced security, which is particularly important when you’re considering using AI to help with data analysis.

Since 2024, generative AI tools have become embedded in most productivity software (Word, Excel, PowerPoint, Teams, Google Workspace, Adobe Creative Suite). Whilst this book uses Copilot, you should expect to see AI features in many of the tools you already use.

1.1 A brief introduction

1.1.1 AI model vs AI platforms

There are people far more qualified than me to explain AI, but one brief and important distinction is between the AI model and the AI platform. The underlying AI model is a Large Language Model (LLM), which essentially does extremely sophisticated predictive text. It’s not thinking and it’s not conscious; it is just very good at predicting, in a human-like way, which word is likely to come after another word.
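To make the “predictive text” idea concrete, here is a deliberately tiny sketch in Python. It is a toy under heavy simplifying assumptions, not how GPT-5 or any real LLM works: it counts which word follows which in a short sample sentence and then always predicts the most frequent follower. Real LLMs use neural networks trained on enormous corpora and work with word fragments (tokens) rather than whole words, but the basic task, predicting what comes next, is the same. The function names `train_bigrams`, `predict_next`, and `generate` are hypothetical and only for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Toy 'training': count how often each word follows each other word."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    # Ties are broken by the order in which followers were first seen.
    return counts.most_common(1)[0][0]


def generate(followers: dict[str, Counter], start: str, length: int) -> str:
    """Build text one word at a time by repeatedly predicting the next word."""
    output = [start]
    for _ in range(length):
        nxt = predict_next(followers, output[-1])
        if nxt is None:
            break
        output.append(nxt)
    return " ".join(output)


sample = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(sample)
print(predict_next(model, "the"))   # cat
print(generate(model, "the", 4))    # the cat sat on the
```

The generated text looks grammatical, yet the program has no idea what a cat or a mat is; it only knows which words tended to follow which. Scaled up enormously, that is why fluent AI output can still be wrong.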

Different software companies have created different AI models. OpenAI has a series of models it refers to as Generative Pre-trained Transformers (GPTs). Public awareness of AI exploded when OpenAI released ChatGPT in late 2022, which was built on GPT-3.5, and at the time of writing the most recent model is GPT-5. The capability of each successive model is claimed to increase significantly, both in terms of its ability (e.g., to pass standardised tests) and in the human-ness of its responses. In addition to GPT-5, other widely used model families include Claude (Anthropic), Gemini (Google DeepMind), and Llama (Meta). Different models and platforms may give slightly different results because they are tuned differently.

Unlike earlier versions, most current models are multimodal by default: they can process text, images, and in some cases audio and video. Copilot inherits some of this; for example, it can analyse images or diagrams that you paste in.

The name of the model and the name of the platform you use are not always the same, although they can look similar. ChatGPT is the name of the platform, but the underlying model it uses is GPT-5. Additionally, other companies and apps can license the models, so Microsoft’s Copilot also uses GPT-5.

You don’t need to understand all of this in detail. The key points are that some platforms share the same underlying model (so you should expect similar capabilities) and that this model can and will be updated.

1.1.2 Ethics and AI

It’s beyond the scope of this book (and my expertise) to go into much detail about the ethics of AI, but I want to acknowledge that AI is hugely problematic in several ways.

First, and most importantly, AIs are trained on huge corpora of human language. Humans are full of bias and prejudice, and consequently so are our offspring AIs, which have repeatedly been found to encode sexist, racist, and ableist views in their outputs.

Second, many of the developers that have produced AI models have done so behind closed doors and are very secretive about exactly what data the models have been trained on. One reason for this is that many AI models have clearly been given access to copyrighted sources (e.g., books and film scripts). An increasing number of lawsuits are being filed, although, given how far and how fast this particular horse has already bolted, it remains unclear what the future holds.

Third, whilst the use of AI has been touted as a solution to help us manage climate change, it’s also a direct contributor to the problem because of the energy demands.

Fourth, training and moderation often rely on hidden human labour. Low-paid workers, often in the Global South, are employed to filter harmful content or annotate data. This raises ethical questions about labour exploitation.

Regulation is catching up: the EU AI Act began applying in stages in 2025, and the UK is consulting on its own frameworks. This means that expectations about how to use AI responsibly may change rapidly. The AI Now 2025 Report provides an annual overview of social, ethical, and political issues in AI.

1.2 Criticality and AI

When using AI, it is not enough to know how to operate the tools; you also need to approach them critically. That means asking not just “what can this do for me?” but also “how trustworthy is this output, and what are the wider implications of relying on it?”

Here are some of the things that should always be in the back of your mind when using AI.

1.2.1 Accuracy

AI tools are trained to generate fluent and convincing text, but fluent text is not necessarily correct. They frequently “hallucinate”, producing confident but false information such as incorrect references, fabricated statistics, or misattributed quotes. Treat outputs as drafts or starting points, not final answers, and verify them with authoritative sources.

1.2.3 Transparency

You cannot see the training data or the “reasoning” steps of the model. That lack of transparency makes it hard to know why it gave a particular answer or whether it is missing key perspectives. Ask yourself what might have been left out or under-represented, and be cautious about treating AI answers as comprehensive.

1.2.4 Epistemic Caution

Epistemic caution means being careful about what you claim to know. AIs generate text that looks authoritative, which can give a false sense of understanding. This risks shallow learning if students accept outputs uncritically rather than grappling with concepts themselves. Do you really know it, or can you just repeat what the computer told you? Use AI as a “thinking partner,” but make sure you can explain ideas in your own words without relying on its phrasing.

1.2.5 Equity and Access

Even though UofG provides Copilot securely, in wider society access to premium AI tools is uneven. This creates new digital divides between those who can afford subscription services and those who cannot, or between those trained to use AI critically and those left behind. Reflect on whose voices are privileged or excluded in AI-mediated work.

1.2.6 Over-reliance and deskilling

Outsourcing too much to AI can erode your own skills in writing, problem-solving, or coding. Just as calculators changed how we teach arithmetic, AI may change what we expect students to be able to do unaided. Use AI strategically, but keep developing your own capacity, especially in core academic skills such as argumentation, critical reading, and data analysis.

1.2.7 Copilot

Microsoft Copilot is not one single tool but a family of services (e.g., in Word, Excel, Edge, Outlook). In this book, we focus on Copilot Chat accessed via Office365 using your University account, because this is the secure, enterprise version.

Microsoft Copilot can be accessed by signing into Office365 with your University of Glasgow student account. Whilst ChatGPT is the most widely known and used AI platform, the advantage of using your enterprise account is that your data is processed more securely and what you input won’t be used to train the model.

This is really, really important: if you’re found to have uploaded sensitive data to an insecure AI tool, you can be accused of academic and/or research misconduct. So if you’re using AI for any University work, use Copilot.

1.3 Login

Log in to Copilot using your University of Glasgow account.

At the time of writing (September 2025), you need to switch GPT-5 on in order to use it, so make sure you also click the “Try GPT-5” button in the top right.

Remember that “Copilot” is a family of tools. The link above takes you to Copilot Chat, which is the safest environment for coursework. Copilot in Word/Excel/PowerPoint may look slightly different but uses the same underlying model.

1.4 Prompting

The key difference between using an AI and a regular search engine is that you can shape the output of the AI by “prompt engineering”, which is the phrase used to describe the art of asking the question in a way that gives you the answer you want. It’s easier to show than tell.

Think of a book, TV show, film, or video game that you know really well. I chose Final Fantasy VII (the original PlayStation version). Ask Copilot to give you three different summaries, giving it progressively more guidance each time.

Ask it for a summary without any additional context, e.g., “Give me a summary of Final Fantasy VII (original PlayStation version)”.

Then, ask it for a summary but give it a steer on the intended audience, e.g., “Give me a summary of Final Fantasy VII (original PlayStation version) for someone who knows nothing about video games” or “Give me a summary of Final Fantasy VII (original PlayStation version) for an expert gamer who has played it many times”.

Finally, ask it for a summary, but give it a steer on how it should act, e.g., “Act as an expert videogame reviewer for the Guardian. Give me a summary of Final Fantasy VII (original PlayStation version) for an expert gamer who has played it many times” or “Act as someone who spends too much time on Reddit and thinks they know everything. Give me a summary of Final Fantasy VII (original PlayStation version) for an expert gamer who has played it many times”.
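The prompts above share a simple structure: an optional role (“Act as…”), the task itself, and an optional audience (“for someone who…”). As a minimal sketch, the hypothetical Python helper below assembles prompts from those three parts so you can see the pattern. It is an illustration only: it does not connect to Copilot in any way, and you would still type or paste the resulting text into Copilot Chat yourself. The name `build_prompt` and its parameters are assumptions, not part of any Microsoft tool.

```python
from __future__ import annotations


def build_prompt(task: str, audience: str | None = None, role: str | None = None) -> str:
    """Hypothetical helper: combine an optional role, a task and an optional audience."""
    parts = []
    if role:
        # Telling the AI how to act comes first.
        parts.append(f"Act as {role}.")
    sentence = task
    if audience:
        # Telling the AI who the answer is for narrows the level of detail.
        sentence += f" for {audience}"
    parts.append(sentence + ".")
    return " ".join(parts)


task = "Give me a summary of Final Fantasy VII (original PlayStation version)"
print(build_prompt(task))
print(build_prompt(task, audience="someone who knows nothing about video games"))
print(build_prompt(task,
                   audience="an expert gamer who has played it many times",
                   role="an expert videogame reviewer for the Guardian"))
```

Keeping the parts separate makes it easy to change one thing at a time (just the audience, or just the role) and compare what changes in Copilot’s output, which is the point of the exercise.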

Reflect on the differences in the information and detail it gives you for each one, and on how your existing expert knowledge allows you to evaluate the accuracy of the summaries.

1.5 Copilot features

Whilst many AIs use the same underlying model (e.g., GPT-5), the platforms can differ in their functionality.

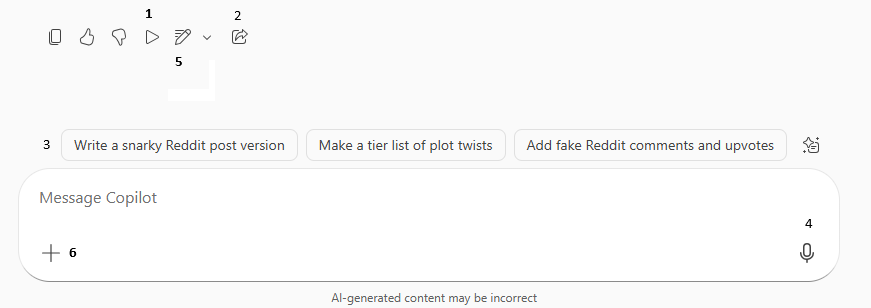

- The “Read Aloud” function will get Copilot to read its response to you.

- The “Share prompt and copy response” button copies your prompt and the response, which you can then paste into, e.g., a Word document. This is useful because if you’re ever asked to provide more information on how you have used AI at University, this feature helps you to be transparent.

- Suggested follow-up prompts give you ideas for further prompting (although I have never found these useful).

- The dictate function allows you to speak your prompt to the AI. On a laptop, you may need to check that your microphone settings allow access. This is often easier on a phone.

- “Edit in Pages” opens an editable page so that you can add to and edit the response. You can then enter further prompts, add the new responses to the page, and share it with others. We’re not really going to use this feature, but if you want to know more, Microsoft have additional info and training.

- Add a file to Copilot to use as part of your prompt. Because you’re signed in using your UofG account it will automatically connect to your OneDrive. Even though Copilot connects securely to OneDrive, you should check your module handbook and lecturer guidance before uploading slides, datasets, or assignments. Not all materials are permitted to be shared with AI.

Try out each of the six features described above and reflect on which ones you find useful and why.

1.6 Be critical

Good AI use should strengthen, not replace, your learning processes and your motivation to learn, and this is what we’re going to try to convince you of throughout this book. Two additional ideas matter here.

First, self-determination theory suggests that high-quality learning is supported when activities nurture autonomy (choice and voice), competence (a sense of effectiveness), and relatedness (feeling connected to others or to authentic goals).

Second, self-efficacy grows primarily through mastery experiences: every time you attempt an answer first, then use AI to check, refine, and correct, you accumulate evidence that you can succeed again. Over-scaffolding (“just tell me the answer”) risks undermining both autonomy and self-efficacy.

Throughout this book, we are going to ask you to reflect on how your use of AI might affect you psychologically, because this isn’t only about academic integrity and cheating: there are serious risks to your long-term success and resilience.

Reflect on the differences between the summaries: the technicality of the language, the accuracy and nuance of the information, what it chose to focus on, and what it chose to omit. The reason I asked you to create a summary of something you already know well is that you’re aware of where the gaps are. Remember this when you’re asking an AI something you don’t know as well. Just because you can’t see the gaps, inaccuracies, and biases doesn’t mean they’re not there.

- Tell it how to act

- Tell it who you are

- Always check for gaps, errors, or bias

- Fluency ≠ truth: persuasive text may still be wrong

- Authority matters! Ask yourself “who should be the expert here?”

- Over-reliance risks deskilling. Keep practising your own academic skills